Since they are my favourite, let’s learn something neat about matrices, something that can also serve as the first post on this new blog of mine about math, programming, and DSP.

Matrix Multiplication as a Linear Operator

It turns out that matrix multiplication can be used to perform any linear operation on a finite-dimensional vector space, and a whole lot of interesting things are linear. Geometrically speaking, scaling, rotation, and skewing (shearing) are all linear operations.

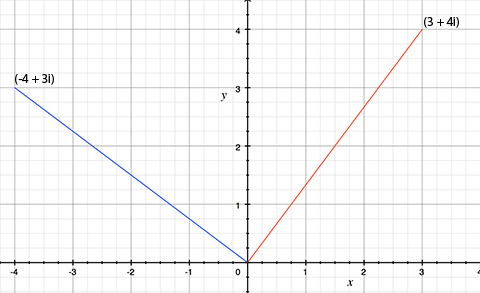

Let's say we want to model the multiplication of two complex numbers with matrices. First we need some complex numbers to multiply, and I happen to like $(3 + 4i) \cdot i$.

That part is easy: it is just polynomial multiplication, so we distribute $i$ onto both terms and use $i^{2} = -1$:

$$(3 + 4i) \cdot i = 3i + 4i^{2} = -4 + 3i$$

Yep, multiplying by $i$ is a $90^{\circ}$ counterclockwise rotation. Anyway, the thing I think is cool is that the same operation can be written as a matrix multiply instead of a polynomial multiply.
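To check the rotation claim numerically, here is a minimal sketch using Ruby's built-in `Complex` class (the variable names are hypothetical): the product keeps its length of 5 and its angle grows by $90^{\circ}$.

```ruby
# Multiply 3 + 4i by i using Ruby's built-in Complex class.
z = Complex(3, 4)
rotated = z * Complex::I

puts rotated        # => -4+3i

# Multiplying by i keeps the length (magnitude) the same...
puts rotated.abs    # => 5.0

# ...and adds 90 degrees to the angle measured from the real axis.
degrees = (rotated.arg - z.arg) * 180 / Math::PI
puts degrees.round(6)   # => 90.0
```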

Identity Operation

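The identity matrix is the matrix that acts as a no-op when you multiply by it. Why does it act like that, and why is it shaped the way it is? Say you have this matrix multiply:

$$\begin{bmatrix} c & 0 \\ 0 & c \end{bmatrix} \begin{bmatrix} x \\ y \end{bmatrix} = \begin{bmatrix} cx \\ cy \end{bmatrix}$$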

If $c = 1$, there is your identity operation. But have you ever wondered:

What are these rows and columns in a matrix really all about?

Say you view that $2 \times 2$ matrix as two unit-length column vectors sitting side by side.

The first column $\begin{bmatrix} 1 & 0 \end{bmatrix}^{T}$ and the second column $\begin{bmatrix} 0 & 1 \end{bmatrix}^{T}$ are exactly the standard basis vectors that span $\mathbb{R}^{2}$, pointing along the x and y axes (or, for complex numbers, the real and imaginary axes).
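A minimal sketch of both ideas, assuming Ruby's `matrix` library (variable names are hypothetical): $c$ times the identity scales a vector by $c$, and multiplying any matrix by a standard basis vector picks out one of its columns.

```ruby
require 'matrix'

v = Vector[3, 4]

# c times the identity: c on the diagonal, 0 everywhere else.
identity = Matrix.identity(2)   # c = 1
scale3   = Matrix.scalar(2, 3)  # c = 3

p identity * v   # => Vector[3, 4]   (no-op)
p scale3 * v     # => Vector[9, 12]  (everything scaled by 3)

# Multiplying a matrix by a standard basis vector picks out one column,
# so each column says where that basis vector ends up.
m  = Matrix[[1, 2],
            [3, 4]]
e1 = Vector[1, 0]
e2 = Vector[0, 1]
p m * e1         # => Vector[1, 3]  (first column)
p m * e2         # => Vector[2, 4]  (second column)
```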

Change of Basis

So we saw that multiplying by the identity matrix performs no operation at all, because it leaves the basis vectors of $\mathbb{R}^{2}$ unchanged. We also saw that if we perform a simple change of basis that scales each basis vector by $c$, everything gets scaled by $c$. The diagonal numbers don't have to equal each other either; if they were different, you would get a non-uniform scaling that stretches the x and y directions by different amounts.

Great, so what do the numbers on the other diagonal, which have been $0$ up to this point, do? Those off-diagonal entries mix the x and y components together, which is what lets you perform rotations (and skews).
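A small sketch contrasting the two cases, assuming Ruby's `matrix` library and hypothetical example matrices: unequal diagonal entries stretch each axis by its own amount, while a nonzero off-diagonal entry mixes one coordinate into the other.

```ruby
require 'matrix'

v = Vector[1, 1]

# Different values on the diagonal: stretch x by 2 and y by 3.
stretch = Matrix[[2, 0],
                 [0, 3]]
p stretch * v   # => Vector[2, 3]

# A nonzero off-diagonal entry: x picks up a copy of y,
# which skews (shears) the plane horizontally.
shear = Matrix[[1, 1],
               [0, 1]]
p shear * v     # => Vector[2, 1]
```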

Time to Define the $i$ Matrix

If we take the two column vectors we are using as our basis and rotate them $90^{\circ}$ counterclockwise, which is easy because they are so simple, we get the new basis we're looking for:

$$i = \begin{bmatrix} 0 & -1 \\ 1 & 0 \end{bmatrix}$$

You can see how the first column, instead of pointing horizontally, now points vertically, and how the second column, which used to point vertically, now points horizontally in the negative direction. Each vector now points $90^{\circ}$ counterclockwise from where it used to.

Since we've rotated each basis vector by $90^{\circ}$, any vector we multiply by $i$ will also rotate $90^{\circ}$ counterclockwise.

Square Root of Negative One

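This should totally be true of a matrix that we decide to name $i$: $i^2$ should equal $-1$, or rather the matrix version of $-1$, which is $-1$ times the identity:

$$i^{2} = \begin{bmatrix} 0 & -1 \\ 1 & 0 \end{bmatrix} \begin{bmatrix} 0 & -1 \\ 1 & 0 \end{bmatrix} = \begin{bmatrix} -1 & 0 \\ 0 & -1 \end{bmatrix} = -I$$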

Further proof that this makes any sense:
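Here is the $i$ matrix applied to $3 + 4i$, written as the column vector $\begin{bmatrix} 3 & 4 \end{bmatrix}^{T}$. The result is the vector for $-4 + 3i$, the same answer the polynomial multiplication gave:

$$\begin{bmatrix} 0 & -1 \\ 1 & 0 \end{bmatrix} \begin{bmatrix} 3 \\ 4 \end{bmatrix} = \begin{bmatrix} 0 \cdot 3 + (-1) \cdot 4 \\ 1 \cdot 3 + 0 \cdot 4 \end{bmatrix} = \begin{bmatrix} -4 \\ 3 \end{bmatrix}$$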

Just as the old "graph paper" example predicted. So, I am pretty happy with my explanation of this phenomenon. I have to admit I am still getting used to this MathJax LaTeX formatting stuff.

I'm usually happiest with a code example, so here is a Ruby example of the same thing:

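A minimal sketch, assuming Ruby's `matrix` library and hypothetical variable names, that builds the $i$ matrix, squares it, and applies it to $3 + 4i$:

```ruby
require 'matrix'

# The "i matrix": the standard basis vectors rotated 90 degrees counterclockwise.
i_matrix = Matrix[[0, -1],
                  [1,  0]]
identity = Matrix.identity(2)

# i * i should be the matrix version of -1.
p i_matrix * i_matrix                    # => Matrix[[-1, 0], [0, -1]]
p i_matrix * i_matrix == identity * -1   # => true

# (3 + 4i) * i as a matrix-vector product: 3 + 4i becomes -4 + 3i.
p i_matrix * Vector[3, 4]                # => Vector[-4, 3]

# Four quarter turns bring you back to where you started.
p i_matrix ** 4 == identity              # => true
```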

Other Rotations

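We don't always want to rotate by $90^{\circ}$, but there is a formula that builds a matrix for any rotation by $\omega$ radians, and it happens to look like this (plugging in $\omega = \pi/2$ gives back the $i$ matrix):

$$R(\omega) = \begin{bmatrix} \cos\omega & -\sin\omega \\ \sin\omega & \cos\omega \end{bmatrix}$$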

Let’s write a Ruby method to create rotation matrices for us, just by passing an angle in radians.

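A minimal sketch of such a method, assuming Ruby's `matrix` library; `rotation` and `close?` are hypothetical helper names. Floating-point `cos(pi/2)` is about `6e-17` rather than exactly 0, so comparisons use a small tolerance.

```ruby
require 'matrix'

# Hypothetical helper: the 2x2 matrix that rotates vectors counterclockwise
# by omega radians, R(omega) = [[cos, -sin], [sin, cos]].
def rotation(omega)
  c = Math.cos(omega)
  s = Math.sin(omega)
  Matrix[[c, -s],
         [s,  c]]
end

# Hypothetical helper: true when every entry of two matrices agrees within tol.
def close?(m1, m2, tol = 1e-12)
  (m1 - m2).to_a.flatten.all? { |x| x.abs < tol }
end

# A quarter turn should reproduce the i matrix (up to floating-point noise).
i_matrix = Matrix[[0, -1], [1, 0]]
p close?(rotation(Math::PI / 2), i_matrix)            # => true

# Rotating (1, 0) by 45 degrees lands on (sqrt(2)/2, sqrt(2)/2).
v = rotation(Math::PI / 4) * Vector[1, 0]
p v.map { |x| x.round(6) }                            # => Vector[0.707107, 0.707107]

# Rotations compose by adding their angles: R(a) * R(b) == R(a + b).
a = 0.3
b = 1.1
p close?(rotation(a) * rotation(b), rotation(a + b))  # => true
```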

Matrix Form

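Now that we've established that all this stuff about complex numbers and matrices works, we sort of have a formula for representing a complex number as a matrix:

$$a + bi \;\longmapsto\; \begin{bmatrix} a & -b \\ b & a \end{bmatrix} = a \begin{bmatrix} 1 & 0 \\ 0 & 1 \end{bmatrix} + b \begin{bmatrix} 0 & -1 \\ 1 & 0 \end{bmatrix}$$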
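A quick check of that formula, assuming Ruby's built-in `Complex` class and the `matrix` library (`complex_to_matrix` is a hypothetical helper): multiplying the matrices of two complex numbers should give the matrix of their product.

```ruby
require 'matrix'

# Hypothetical helper: encode a + bi as [[a, -b], [b, a]].
def complex_to_matrix(z)
  a = z.real
  b = z.imaginary
  Matrix[[a, -b],
         [b,  a]]
end

z = Complex(3, 4)
w = Complex(1, 2)

# (3 + 4i)(1 + 2i) = -5 + 10i, computed both ways.
lhs = complex_to_matrix(z) * complex_to_matrix(w)
rhs = complex_to_matrix(z * w)
p lhs          # => Matrix[[-5, -10], [10, -5]]
p lhs == rhs   # => true

# The complex number i itself maps to the i matrix.
p complex_to_matrix(Complex::I)   # => Matrix[[0, -1], [1, 0]]
```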

So what happens if we use some other pattern besides that? What if we use some other dimension besides the imaginary one? What if that dimension were infinitesimal? Most people learn calculus by learning about limits first, but I didn't: I learned on my own, and the first calculus textbook that made sense to me taught derivatives using infinitesimals. It's really similar in some ways, but it revolves around the number $\varepsilon$.

Derivatives

A derivative gives the slope at a single point on a curve, even though it normally takes two actual points to calculate a slope, "rise over run" style. Usually you see the derivative defined using a variable $h$ (or $\Delta x$), taking the limit as it goes to $0$:

$$f'(x) = \lim_{h \to 0} \frac{f(x + h) - f(x)}{h}$$
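For contrast, here is a minimal sketch of the limit-style approach in Ruby: compute rise over run with a small but nonzero $h$ and watch it approach the derivative. The names `slope` and `square` are hypothetical.

```ruby
# Hypothetical helper: rise over run between x and x + h.
def slope(f, x, h)
  (f.call(x + h) - f.call(x)) / h
end

square = ->(x) { x * x }

# The derivative of x**2 at x = 3 is 6; each smaller h gets closer to it,
# but h can never actually be 0 (that would be 0/0).
[1.0, 0.1, 0.001].each do |h|
  puts "h = #{h}: slope = #{slope(square, 3.0, h)}"
end
```

For $x^{2}$ this rise over run is exactly $2x + h$, so some error always remains for any nonzero $h$, and floating-point cancellation starts to hurt when $h$ gets very small.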

…but in this "style" of calculus, instead of taking a limit, you use a nonzero number $\varepsilon$ where $\varepsilon^{2} = 0$. In math, if there is some positive integer $n$ where $x^n = 0$, you call $x$ nilpotent.

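So, for example, with $f(x) = x^{2}$:

$$f(x + \varepsilon) - f(x) = (x + \varepsilon)^{2} - x^{2} = 2x\varepsilon + \varepsilon^{2} = 2x\varepsilon$$

The rise is exactly $2x$ times the run $\varepsilon$, so the slope is $2x$.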

And that all worked out correctly, because $2x$ really is the derivative of $x^{2}$. The key to making it work was defining $\varepsilon^{2} = 0$, which totally reminds me of defining $i^{2} = -1$.

Dual Numbers

Just as a complex number has the form $a + bi$, a dual number has the form $a + b\varepsilon$. The imaginary unit $i$ has magic powers, in that it can do rotations you would normally need trigonometry for, but a dual number has the magic power of automatically calculating the derivative of a function.
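To see where that power comes from, multiply two dual numbers out term by term. The $\varepsilon^{2}$ term drops out, and if $a, b$ are $f(x), f'(x)$ and $c, d$ are $g(x), g'(x)$, the $\varepsilon$ part is exactly the product rule $fg' + f'g$:

$$(a + b\varepsilon)(c + d\varepsilon) = ac + (ad + bc)\varepsilon + bd\,\varepsilon^{2} = ac + (ad + bc)\varepsilon$$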

To calculate the value of a function and its derivative simultaneously, all you need to do is pass the function the dual number $x + \varepsilon$, that is, whatever $x$ happens to be plus $1\varepsilon$.

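Simple example again, with $f(x) = x^{2}$:

$$f(x + \varepsilon) = (x + \varepsilon)^{2} = x^{2} + 2x\varepsilon + \varepsilon^{2} = x^{2} + 2x\varepsilon$$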

The result of the function is another dual number whose real part is $x^{2}$ and whose dual part is $2x$: the value $f(x)$ and the derivative $f'(x)$, respectively.

Dual Numbers as Matrices

So, just like we can encode a complex number as a $2 \times 2$ matrix, we can also encode a dual number in a similar way.

So if we multiply $\varepsilon \cdot \varepsilon$ as matrices, we should get the zero matrix.
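A minimal sketch, assuming the common encoding $a + b\varepsilon \mapsto \begin{bmatrix} a & b \\ 0 & a \end{bmatrix}$ (so $\varepsilon$ is the matrix with a single 1 in the top-right corner) and Ruby's `matrix` library; `dual_to_matrix` is a hypothetical helper:

```ruby
require 'matrix'

# Assumed encoding: a + b*eps  ->  [[a, b], [0, a]]
def dual_to_matrix(a, b)
  Matrix[[a, b],
         [0, a]]
end

eps = dual_to_matrix(0, 1)     # the matrix playing the role of epsilon
p eps * eps                    # => Matrix[[0, 0], [0, 0]]
p eps * eps == Matrix.zero(2)  # => true

# f(x) = x**2 evaluated at x + eps with x = 3: expect 9 + 6*eps,
# i.e. f(3) = 9 on the diagonal and f'(3) = 6 in the top-right corner.
x = dual_to_matrix(3, 1)
p x * x                        # => Matrix[[9, 6], [0, 9]]
```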

Ruby Class for Dual Numbers

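A minimal sketch of such a class, assuming only addition, subtraction, multiplication, and non-negative integer powers are needed (the names `Dual`, `real`, and `dual` are hypothetical). The `coerce` method lets plain numbers appear on the left, as in `2 * x`.

```ruby
# A minimal dual number a + b*eps, where eps**2 == 0.
class Dual
  attr_reader :real, :dual

  def initialize(real, dual = 0)
    @real = real
    @dual = dual
  end

  def +(other)
    other = lift(other)
    Dual.new(real + other.real, dual + other.dual)
  end

  def -(other)
    other = lift(other)
    Dual.new(real - other.real, dual - other.dual)
  end

  # (a + b eps)(c + d eps) = ac + (ad + bc) eps, because eps**2 == 0.
  def *(other)
    other = lift(other)
    Dual.new(real * other.real, real * other.dual + dual * other.real)
  end

  # Non-negative integer powers by repeated multiplication.
  def **(n)
    raise ArgumentError, "only non-negative integer powers" unless n.is_a?(Integer) && n >= 0
    (1..n).reduce(Dual.new(1)) { |acc, _| acc * self }
  end

  # Called by Ruby for expressions like `2 * x` or `1 + x` when x is a Dual.
  def coerce(number)
    [Dual.new(number), self]
  end

  def to_s
    "#{real} + #{dual}ε"
  end

  private

  # Treat a plain number n as the dual number n + 0*eps.
  def lift(value)
    value.is_a?(Dual) ? value : Dual.new(value)
  end
end

# Value and derivative of f(x) = x**3 + 2x at x = 3, in one evaluation.
f = ->(x) { x**3 + 2 * x }
result = f.call(Dual.new(3, 1))
puts result.real   # => 33  (f(3)  = 27 + 6)
puts result.dual   # => 29  (f'(3) = 3 * 9 + 2)
```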

Conclusion

I dunno, I thought that was pretty cool. The more I figure out about matrices, the more I understand how they can be used to implement any linear operator. The reason I care about matrices or linear operators at all (or math, really) is that they work well together with my obsession with digital audio filters. Because a digital filter is usually a linear operation, yep, matrices can be used to calculate filters :) Hopefully I will get more into describing how filters work in future blog posts.
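As a small taste of that last point, here is a minimal sketch, assuming a hypothetical 2-tap moving-average filter $y[n] = 0.5\,x[n] + 0.5\,x[n-1]$ with silence before the first sample, written as a matrix multiply with Ruby's `matrix` library:

```ruby
require 'matrix'

# Hypothetical helper: each row holds the filter taps, shifted one sample
# further along, so row n computes 0.5 * x[n] + 0.5 * x[n - 1].
def moving_average_matrix(n)
  Matrix.build(n, n) do |row, col|
    (col == row || col == row - 1) ? 0.5 : 0.0
  end
end

x = Vector[1.0, 3.0, 5.0, 7.0]
y = moving_average_matrix(4) * x
p y   # => Vector[0.5, 2.0, 4.0, 6.0]
```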